CloudVision Initial Configuration

CVP Version

This topology must be deployed with the CVP version set to CVP-2024.2.0-bare.

Preparing the Lab

-

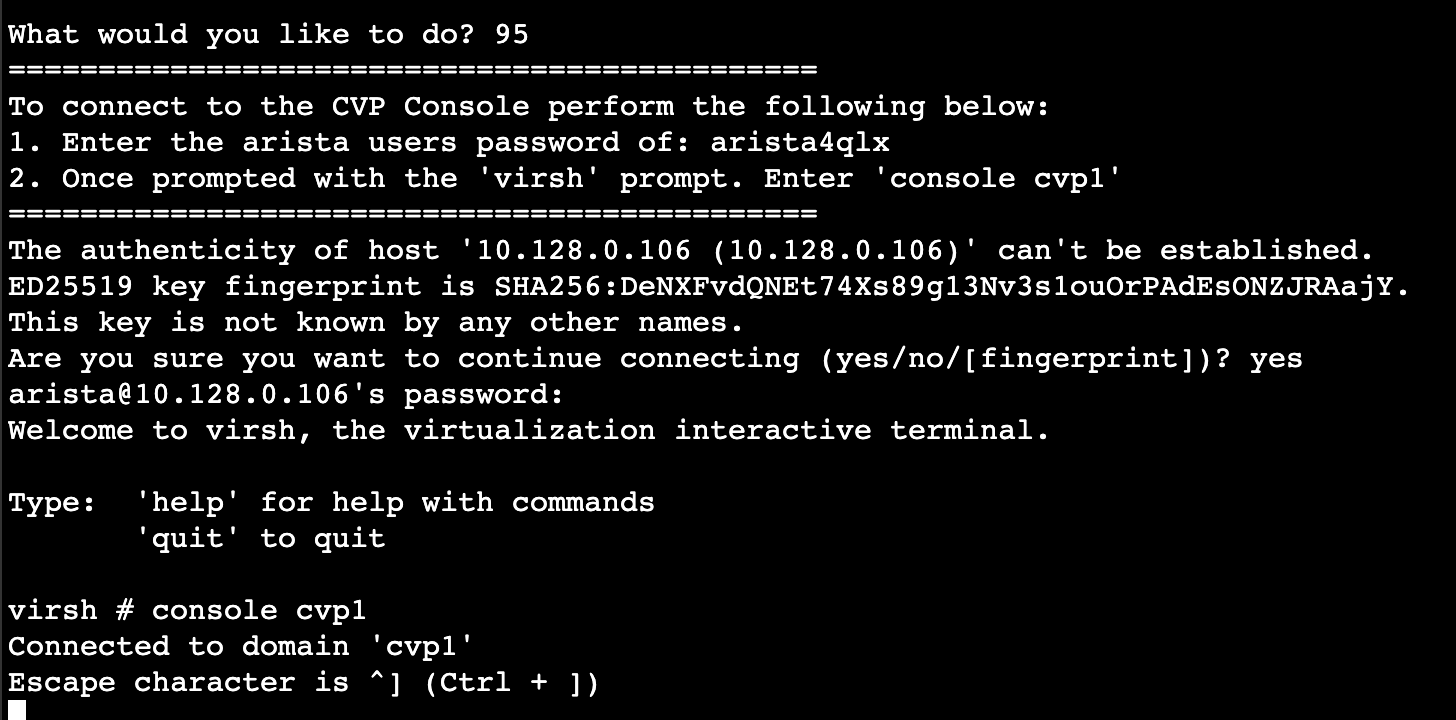

Log into the Arista Test Drive Portal by using SSH or by clicking on Console Access on the main ATD screen. Log in with the

aristauser and the auto-generated password. -

Once you’re on the jump host, select option 98 SSH to Devices (SSH).

Next, select option 95 Connect to CVP Console (console). This will take you to the bash prompt for our jump host.

Since CVP is not configured and doesn’t have an IP address yet, we cannot SSH to it. Instead, we need to open a console connection to the VM by entering the command console cvp1 at the virsh # prompt.

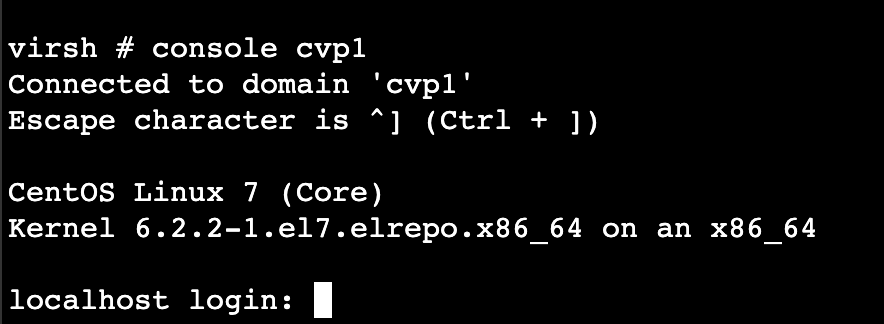

You should now see the localhost login: prompt.

Lab Tasks

Log in using cvpadmin as the username. You will then be asked to set the root user password. Set this password to your lab credential password.

root Password

Make sure you set this to your lab credential password, because you will need it during the upgrade lab to SSH to CVP as the root user.

```text
CentOS Linux 7 (Core)
Kernel 6.2.2-1.el7.elrepo.x86_64 on an x86_64

localhost login: cvpadmin
Last login: Fri Aug 23 16:50:21 on ttyS0
Changing password for user root.
New password:
Retype new password:
passwd: all authentication tokens updated successfully.

CVP Installation Menu
[q]uit [p]rint [s]inglenode [m]ultinode [r]eplace [u]pgrade
>
```

Since we are only setting up one CVP server, select s to choose singlenode.

You will now configure your CVP installation. Refer to the following information for each prompt.

- CloudVision Deployment Model: Selects either a default deployment or a WiFi-analytics-only installation. For this lab, select d for default.
- DNS Server Addresses (IPv4 Only): Comma-separated list of DNS servers. In our lab, 192.168.0.1 (the IP address of the jump host we used to access the CVP VM) acts as the DNS server, NTP server, and default gateway.
- DNS Domain Search List: Comma-separated list of DNS domains on your network. Set this to atd.lab.
- Number of NTP Servers: How many NTP servers you have in your environment. Set this to 1 for this lab.
- NTP Server Address: The address (IPv4 or FQDN) of each NTP server, entered one per prompt. Enter 192.168.0.1 for this lab.
- Is Auth enabled for NTP Server #1: Optionally sets authentication parameters for the NTP server. We do not use authentication, so keep the default value N.
- Cluster Interface Name: The cluster interface name. This is typically left at the default value.
- Device Interface Name: The device interface name. This is also typically left at the default value.
- CloudVision WiFi Enabled: Enable this if you are deploying access points in your environment. For our lab scenario, keep the default value N.
- Enter a private IP range for the internal cluster network (overlay): The private IP range used for the Kubernetes cluster network. This value must be unique, must be a /20 or larger, and must not be link-local, reserved, or multicast. The default value is 10.42.0.0/16; accept this default for our lab.
- FIPS mode: Leave the default of no.
- Hostname (FQDN): The name you will use in the URL to access CloudVision once the deployment is complete. Set this to cvp.atd.lab for this lab.
- IP Address of eth0: The IP address of this node. Set this to 192.168.0.5 in this lab.
- Netmask of eth0: Our example uses a /24, so set this to 255.255.255.0.
- NAT IP Address of eth0: Set this only if you use NAT to access CVP. For this lab, leave it blank.
- Default Gateway: Set this to 192.168.0.1 (our lab jump host).
- Number of Static Routes: Leave blank.
- TACACS Server IP Address: Leave blank.

```text
Enter the configuration for CloudVision Portal and apply it when done.
Entries marked with '*' are required.

Common Configuration:
CloudVision Deployment Model [d]efault [w]ifi_analytics: d
DNS Server Addresses (IPv4 Only): 192.168.0.1
DNS Domain Search List: atd.lab
Number of NTP Servers: 1
NTP Server Address (IPv4 or FQDN) #1: 192.168.0.1
Is Auth enabled for NTP Server #1: N
Cluster Interface Name: eth0
Device Interface Name: eth0
CloudVision WiFi Enabled: no
*Enter a private IP range for the internal cluster network (overlay): 10.42.0.0/16
*FIPS mode: no

Node Configuration:
*Hostname (FQDN): cvp.atd.lab
*IP Address of eth0: 192.168.0.5
*Netmask of eth0: 255.255.255.0
NAT IP Address of eth0:
*Default Gateway: 192.168.0.1
Number of Static Routes:
TACACS Server IP Address:
```

Other Options

There may be options in your deployment that are not covered in this guide. If you encounter one, accept the default value for that field. All of these settings are saved in the /cvpi/cvp-config.yaml file.
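The overlay rules for the internal cluster network can be checked with Python's standard ipaddress module before you type a value at the prompt. This is a minimal sketch, assuming that "link-local", "multicast", and "reserved" refer to the IANA blocks 169.254.0.0/16, 224.0.0.0/4, and 240.0.0.0/4, and reading "unique" as "does not overlap the node's own eth0 subnet". The function name overlay_problems is hypothetical, and the CVP installer may apply checks of its own that this does not reproduce.

```python
import ipaddress

# Assumed interpretation of the guide's rules: IANA link-local, multicast, and reserved (Class E) blocks.
FORBIDDEN = {
    "link-local": ipaddress.ip_network("169.254.0.0/16"),
    "multicast": ipaddress.ip_network("224.0.0.0/4"),
    "reserved": ipaddress.ip_network("240.0.0.0/4"),
}


def overlay_problems(overlay: str, node_networks: list[str]) -> list[str]:
    """Return the reasons a proposed overlay range breaks the rules (empty list means OK)."""
    net = ipaddress.ip_network(overlay)  # raises ValueError if host bits are set
    problems = []
    # "/20 or larger" means a prefix length of 20 or less.
    if net.prefixlen > 20:
        problems.append(f"{net} is smaller than a /20")
    for name, block in FORBIDDEN.items():
        if net.overlaps(block):
            problems.append(f"{net} overlaps the {name} range {block}")
    # "Unique" read as: must not collide with networks the node already uses.
    for other in node_networks:
        if net.overlaps(ipaddress.ip_network(other, strict=False)):
            problems.append(f"{net} overlaps node network {other}")
    return problems


# eth0 in this lab: 192.168.0.5 with netmask 255.255.255.0, i.e. 192.168.0.0/24.
eth0_net = str(ipaddress.ip_interface("192.168.0.5/255.255.255.0").network)
print(overlay_problems("10.42.0.0/16", [eth0_net]))    # [] (the lab default passes)
print(overlay_problems("192.168.0.0/22", [eth0_net]))  # too small, and overlaps eth0
```

Treating the rules as data (a dict of forbidden blocks) keeps each stated requirement visible as one line, so it is easy to adjust if your environment defines "reserved" more broadly.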

You can now select p to print the contents of the /cvpi/cvp-config.yaml file, then select v to verify the configuration. Your output should look similar to this:

```text
>p
common:
  cluster_interface: eth0
  cv_wifi_enabled: 'no'
  deployment_model: DEFAULT
  device_interface: eth0
  dns:
  - 192.168.0.1
  dns_domains:
  - atd.lab
  fips_mode: 'no'
  kube_cluster_network: 10.42.0.0/16
  ntp_servers:
  - auth: n
    server: 192.168.0.1
  num_ntp_servers: '1'
node1:
  default_route: 192.168.0.1
  hostname: cvp.atd.lab
  interfaces:
    eth0:
      ip_address: 192.168.0.5
      netmask: 255.255.255.0
version: 2

>v
Valid config format.
Applying proposed config for network verification.
saved config to /cvpi/cvp-config.yaml
Running : cvpConfig.py tool...
Stopping: network
Running : /bin/sudo /bin/systemctl stop network
Running : /bin/sudo /bin/systemctl is-active network
Running : /bin/sudo /bin/systemctl is-active network
Starting: network
Running : /bin/sudo /bin/systemctl start network
[ 5802.296672] warning: `/bin/ping' has both setuid-root and effective capabilities. Therefore not raising all capabilities.
Valid config.
```
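The printed configuration is ordinary YAML, so its values can also be sanity-checked outside the installer. The sketch below transcribes the values from the p output into a Python dict (to stay within the standard library rather than parsing the file with a YAML library) and runs a few cross-field checks. The function consistency_problems and the checks it performs are hypothetical illustrations; they are not the checks CVP's v option actually runs.

```python
import ipaddress

# Transcribed from the "p" output above; /cvpi/cvp-config.yaml holds the same data.
config = {
    "common": {
        "cluster_interface": "eth0",
        "cv_wifi_enabled": "no",
        "deployment_model": "DEFAULT",
        "device_interface": "eth0",
        "dns": ["192.168.0.1"],
        "dns_domains": ["atd.lab"],
        "fips_mode": "no",
        "kube_cluster_network": "10.42.0.0/16",
        "ntp_servers": [{"auth": "n", "server": "192.168.0.1"}],
        "num_ntp_servers": "1",
    },
    "node1": {
        "default_route": "192.168.0.1",
        "hostname": "cvp.atd.lab",
        "interfaces": {"eth0": {"ip_address": "192.168.0.5", "netmask": "255.255.255.0"}},
    },
    "version": 2,
}


def consistency_problems(cfg: dict) -> list[str]:
    """Hypothetical cross-field checks on a single-node config (not CVP's own validator)."""
    common, node = cfg["common"], cfg["node1"]
    problems = []
    # The NTP server count and the per-server entries should agree.
    if int(common["num_ntp_servers"]) != len(common["ntp_servers"]):
        problems.append("num_ntp_servers does not match the number of NTP entries")
    # Build eth0's network from its address and dotted-decimal netmask.
    eth0 = node["interfaces"]["eth0"]
    iface = ipaddress.ip_interface(f"{eth0['ip_address']}/{eth0['netmask']}")
    gateway = ipaddress.ip_address(node["default_route"])
    # A default gateway must be directly reachable on the node's own subnet.
    if gateway not in iface.network:
        problems.append(f"default gateway {gateway} is not on eth0's subnet {iface.network}")
    # The hostname prompt asks for an FQDN, so expect at least one dot.
    if "." not in node["hostname"]:
        problems.append(f"hostname {node['hostname']!r} is not fully qualified")
    return problems


print(consistency_problems(config))  # [] for the lab values
```

The gateway check is the one most likely to catch a typo in this lab: 192.168.0.1 must fall inside 192.168.0.0/24, which is the network formed by eth0's address and netmask.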

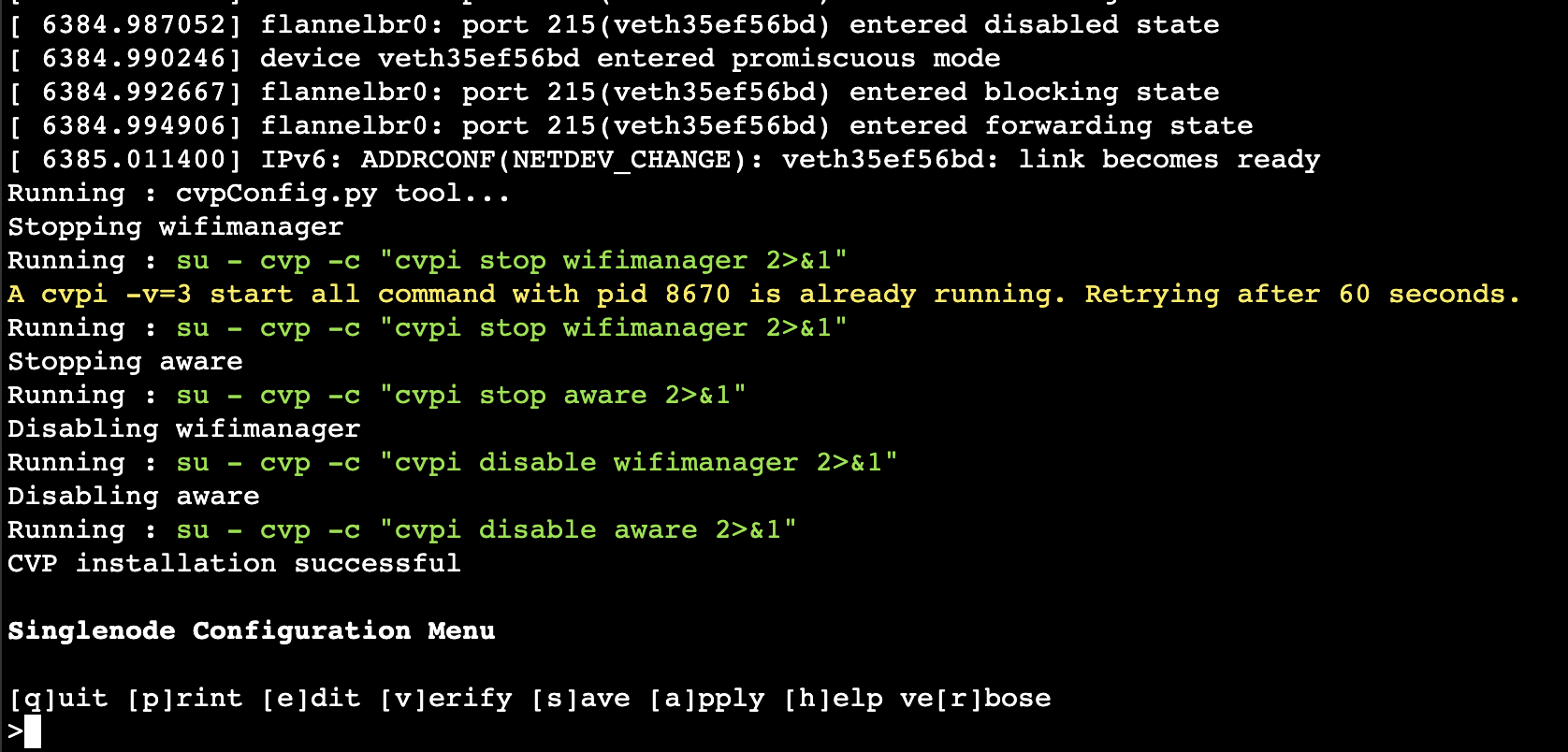

Finally, enter a to apply the changes and begin the CVP installation.

You should now see the installation running, with a lot of scrolling text. The installation takes about 10 minutes to complete.

When you see "CVP installation successful" and the configuration menu appears on the screen again, CVP has been configured successfully. Go back to the main ATD screen and click the CVP link.

On the login screen, use cvpadmin as the username and cvpadmin as the password.

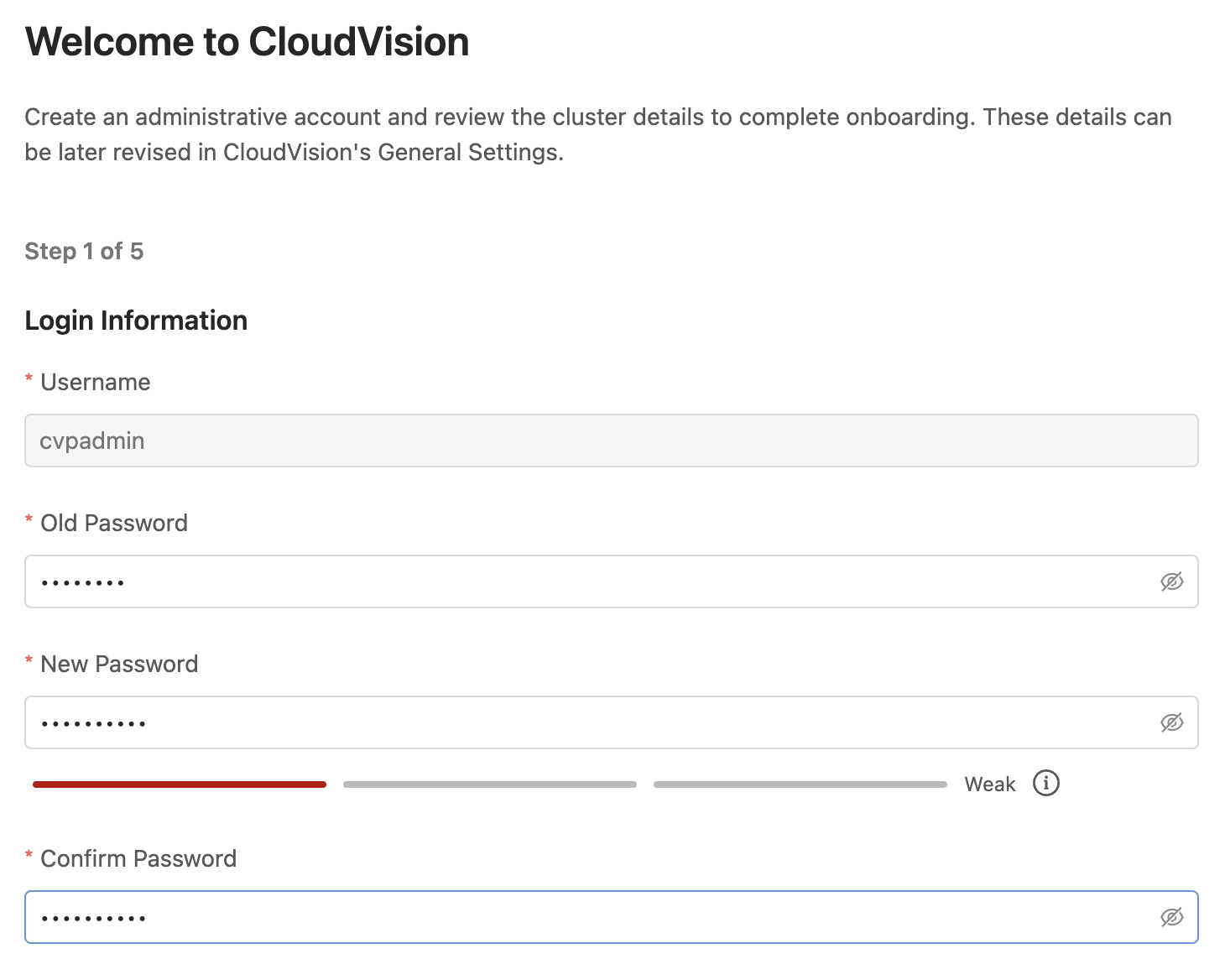

You will then be asked to complete the initial user setup steps. Fill out the information as follows.

Step 1

- Username: cvpadmin
- Old Password: The password you just logged in with.
- New Password: Your ATD lab credentials password.

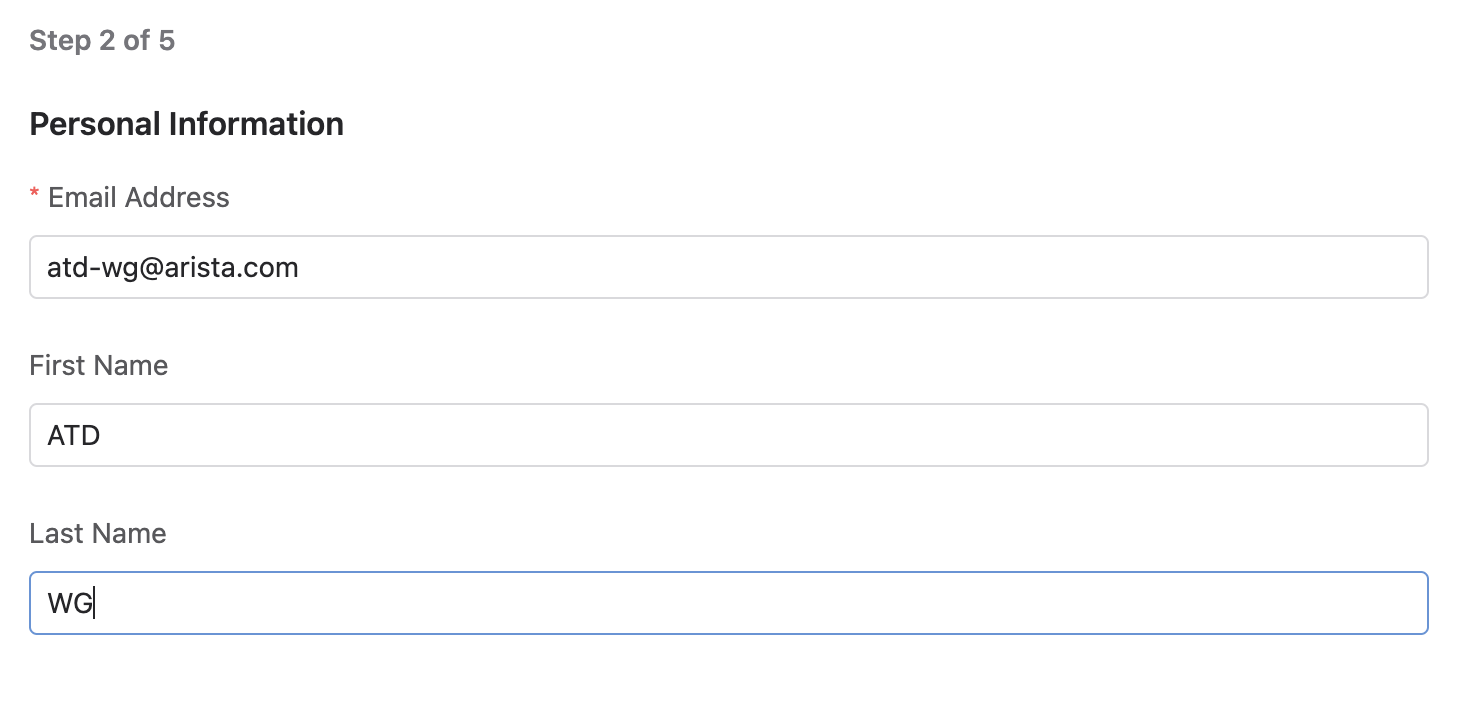

Step 2

- Email Address: Anything, or atd-wg@arista.com
- First Name: A first name.
- Last Name: A last name.

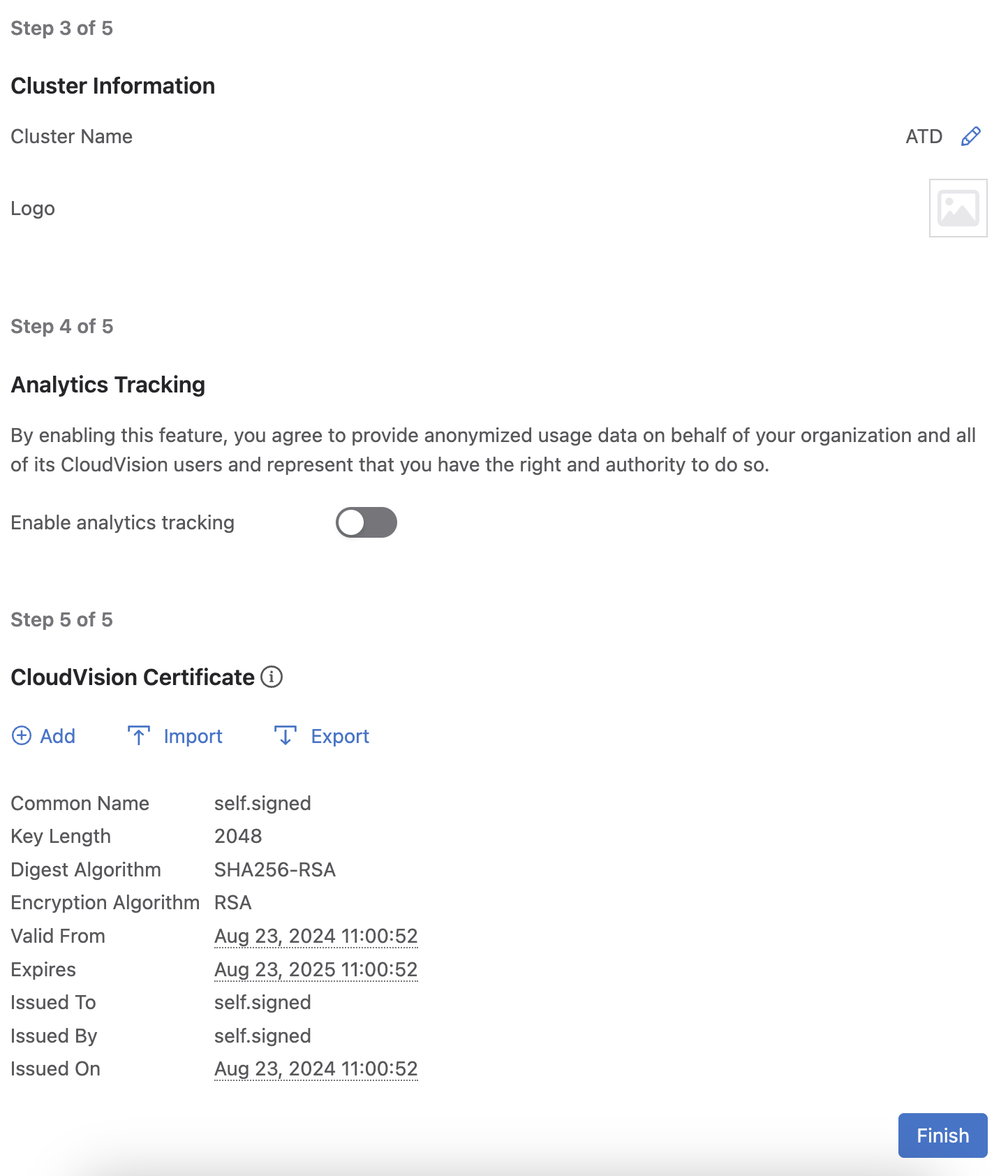

Steps 3-5

- Cluster Name: Whatever you would like.
- Analytics Tracking: Leave off.
- CloudVision Certificate: Leave the default self-signed certificate.

Click Finish.

Log into CVP one more time, and you’ll be greeted by the Devices screen. You have now installed and configured CVP successfully!

Now let’s set up network-admin and network-operator accounts.

Click the gear icon for Settings in the lower-left corner.

Select Users under Access Control.

Click + Add User.

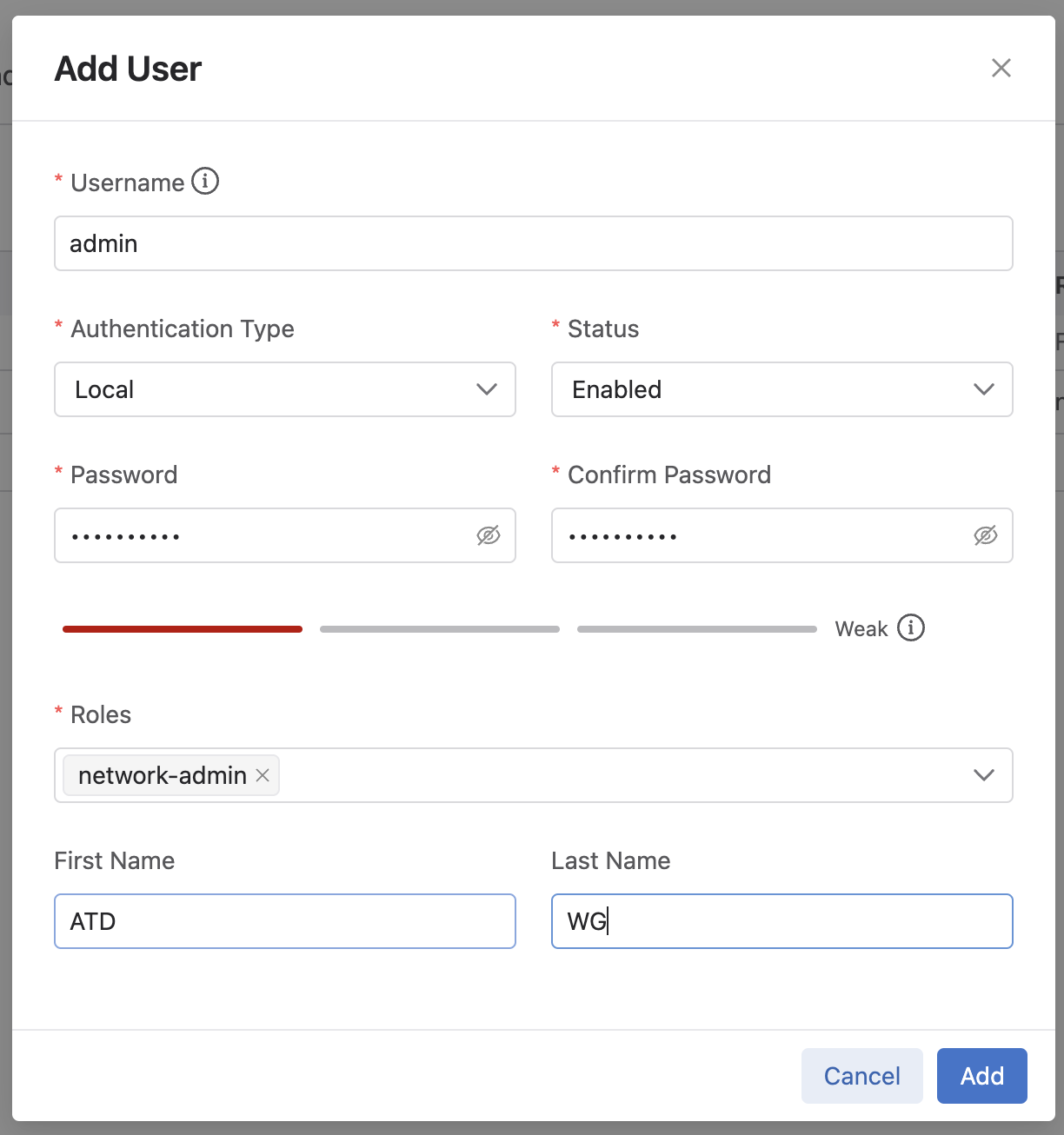

Fill out the Add User screen.

- Username: admin
- Authentication Type: Local
- Status: Enabled
- Password: Lab credential password from the ATD dashboard.
- Roles: network-admin
- First Name: A first name.
- Last Name: A last name.

Click Add.

Repeat these steps, but select the network-operator role to set up the network-operator account.

Additional Tasks

This additional bonus task requires an Arista.com account.

We can now subscribe to bug alerts so that CVP automatically populates compliance data on the Compliance Overview screen.

Click here to browse to Arista.com and log in.

Once logged in, click your name on the top bar and select My Profile.

Copy the Access Token listed at the bottom of the page.

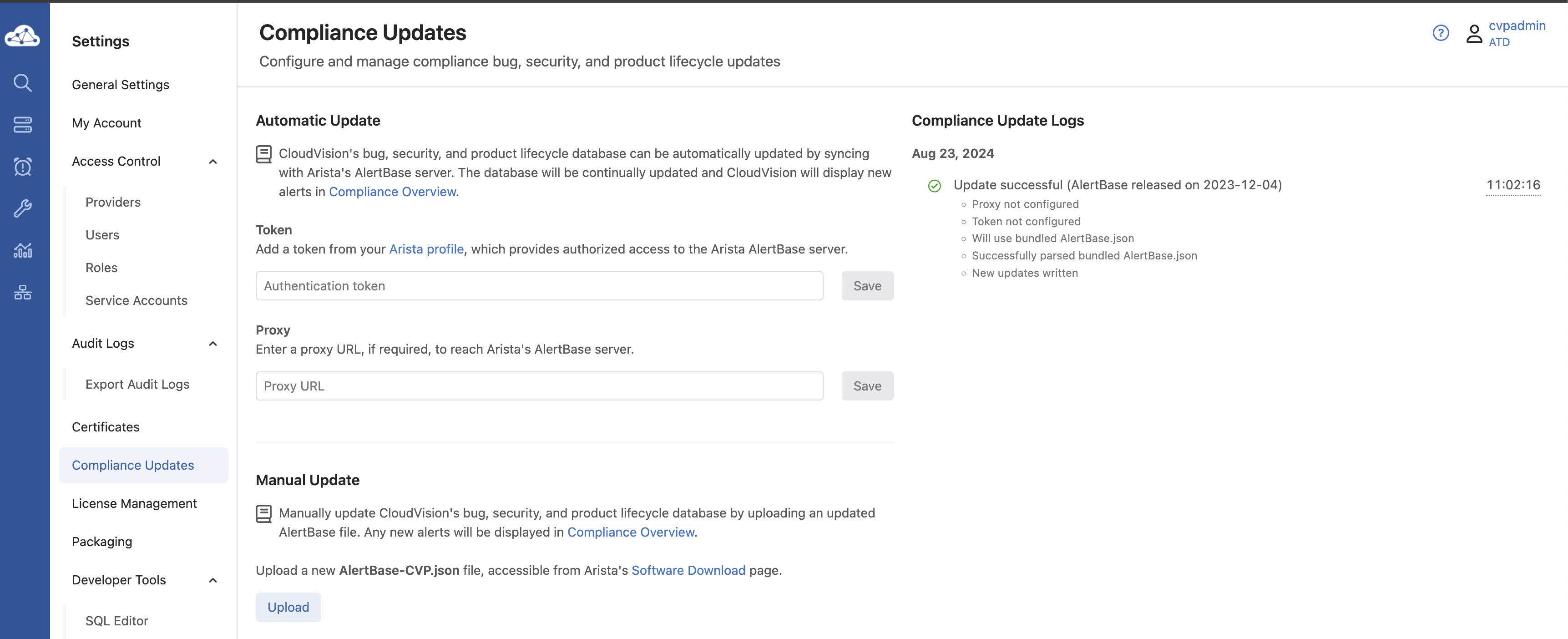

Back in CVP, click the gear icon in the lower-left corner, then select Compliance Updates on the left. Paste the token you copied from Arista.com and click Save.

ATD Error

This step fails in the ATD environment, where the CVP server cannot reach the internet. On a standard deployment where the CVP server has internet access, it completes successfully.

The manual way of updating the bug database is as follows.

Click here to browse to Arista.com.

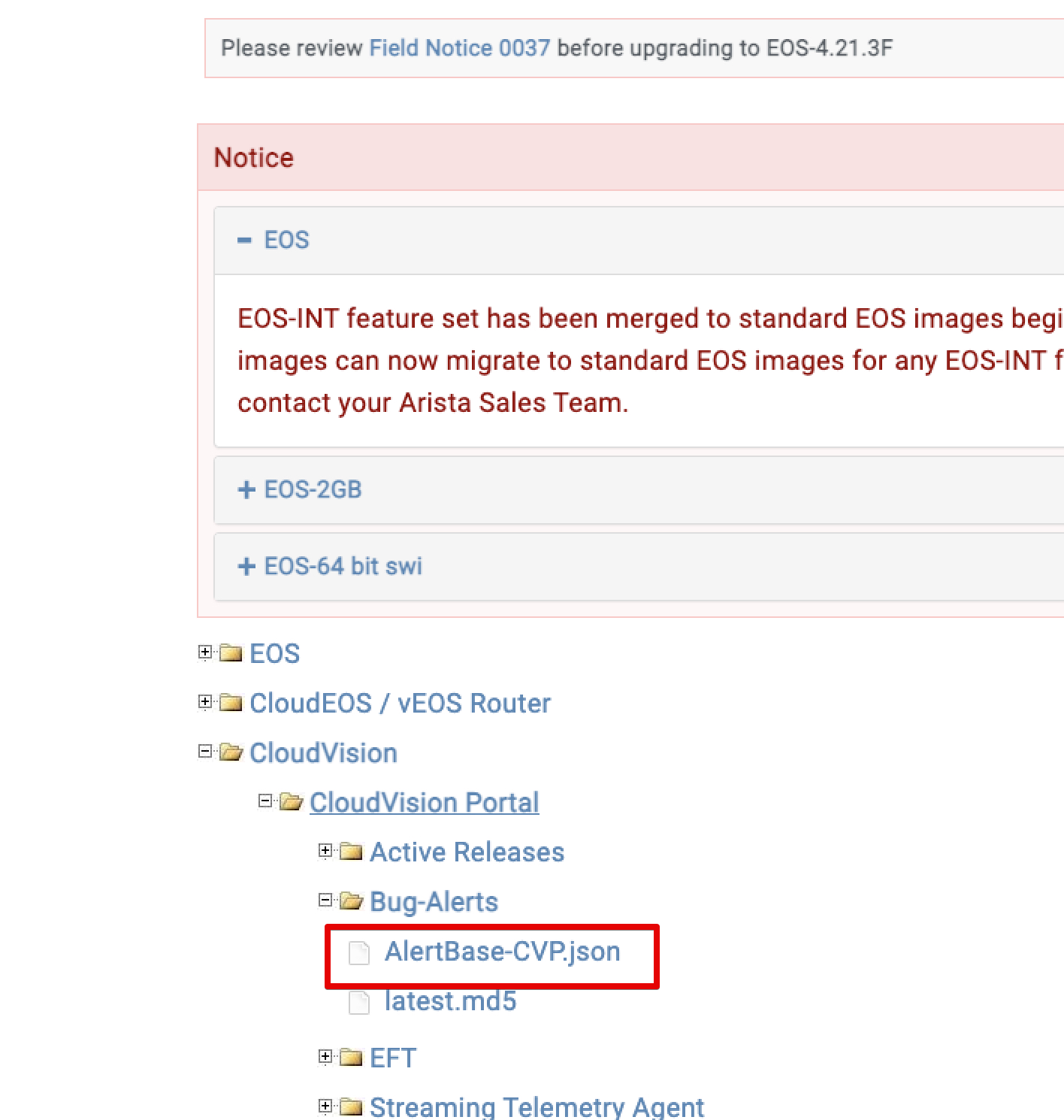

Click Support > Software Downloads and browse to CloudVision > CloudVision Portal > Bug Alerts.

Download the AlertBase-CVP.json file (as seen in the screenshot).
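Before using the downloaded file, you may want to confirm that the download is complete and not an error page saved under the same name. The sketch below assumes only that AlertBase-CVP.json is a JSON document, as its extension suggests; it makes no assumption about the file's schema, and describe_bug_file is a hypothetical helper name.

```python
import json
from pathlib import Path


def describe_bug_file(path: str) -> str:
    """Parse a downloaded file as JSON and summarize its top-level shape.

    Only assumes the file is JSON; no particular schema is assumed.
    Raises ValueError (including json.JSONDecodeError) if it is not valid JSON.
    """
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    kind = type(data).__name__
    size = len(data) if isinstance(data, (dict, list)) else 1
    return f"{path}: valid JSON, top-level {kind} with {size} entries"


if __name__ == "__main__":
    try:
        print(describe_bug_file("AlertBase-CVP.json"))
    except (OSError, ValueError) as err:  # missing, truncated, or not JSON
        print(f"Bug alert file could not be read as JSON: {err}")
```

A truncated download usually fails to parse, so a clean parse is a cheap signal that the whole file arrived; it says nothing about whether the contents are current.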

Best Practice

Arista recommends a three-node multinode setup for on-premises deployments. For this lab, however, we deployed a singlenode installation to conserve cloud resources. A multinode install follows the same steps as the singlenode setup; you would simply repeat them for the secondary and tertiary nodes.

Success

Lab Complete!