Day 2 Operations

Our Campus fabric is working great. But, before too long, it will be time to change our configurations. Lucky for us, that time is today!

Leaf-2A Tagging

The first thing we will take care of with our Day 2 Operations is the tagging issue causing leaf-2a and IDF2 to not show up in our Campus Hierarchy view.

We can check if leaf-2a is inheriting the campus_access_pod key.

ansible-inventory -i sites/campus_a/inventory.yml --host leaf-2a | jq '.l2leaf.node_groups[] | select(.group == "IDF2")'

{
  "filter": {
    "tags": [
      "IDF2_DATA",
      "IDF2_VOICE"
    ]
  },
  "group": "IDF2",
  "mlag": false,
  "nodes": [
    {
      "id": 3,
      "mgmt_ip": "192.168.0.16/24",
      "name": "leaf-2a",
      "uplink_interfaces": [
        "Ethernet1/1",
        "Ethernet2/1"
      ],
      "uplink_switch_interfaces": [
        "Ethernet4",
        "Ethernet4"
      ]
    }
  ]
}

We can run our filter command against a node group we know has inherited the correct key.

ansible-inventory -i sites/campus_a/inventory.yml --host leaf-2a | jq '.l2leaf.node_groups[] | select(.group == "IDF1")'

{
  "campus_access_pod": "IDF1",
  "filter": {
    "tags": [
      "IDF1_DATA",
      "IDF1_VOICE"
    ]
  },
  "group": "IDF1",
  "nodes": [
    {
      "id": 1,
      "mgmt_ip": "192.168.0.14/24",
      "name": "leaf-1a",
      "uplink_interfaces": [
        "Ethernet49"
      ],
      "uplink_switch_interfaces": [
        "Ethernet3"
      ],
      "uplink_switches": [
        "spine-1"
      ]
    },
    {
      "id": 2,
      "mgmt_ip": "192.168.0.15/24",
      "name": "leaf-1b",
      "uplink_interfaces": [
        "Ethernet49"
      ],
      "uplink_switch_interfaces": [
        "Ethernet3"
      ],
      "uplink_switches": [
        "spine-2"
      ]
    }
  ]
}

We can see that the leaf nodes in the IDF1 group are correctly inheriting the campus_access_pod key, but the IDF2 group has no such key.
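To check every node group in one pass rather than one group at a time, the same inventory output can be summarized with a small jq filter. This is a minimal sketch: `list_access_pods` is a hypothetical helper name, not part of AVD or the workshop repository, and it assumes the `l2leaf.node_groups` structure seen in the inventory output.

```shell
# Hypothetical helper (not part of AVD): reads `ansible-inventory --host` JSON on
# stdin and prints one "<group><TAB><campus_access_pod>" line per l2leaf node group.
# Groups without the key are reported as MISSING.
list_access_pods() {
  jq -r '.l2leaf.node_groups[]
         | "\(.group)\t\(.campus_access_pod // "MISSING")"'
}

# Example invocation, reusing the inventory path and host from the commands above:
#   ansible-inventory -i sites/campus_a/inventory.yml --host leaf-2a | list_access_pods
```

Any group reported as MISSING will not be placed in the Campus Hierarchy view until the key is defined for it.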

Below is a snippet of our fabric definition for groups IDF1 and IDF2. See if you can spot the missing link.

l2leaf:
  node_groups:
    - group: IDF1
      filter:
        tags: [ "IDF1_DATA", "IDF1_VOICE" ]
      campus_access_pod: IDF1
      nodes:
        - name: leaf-1a
          id: 1
          mgmt_ip: 192.168.0.14/24
          uplink_interfaces: [ Ethernet49 ]
          uplink_switches: [ spine-1 ]
          uplink_switch_interfaces: [ Ethernet3 ]
        - name: leaf-1b
          id: 2
          mgmt_ip: 192.168.0.15/24
          uplink_interfaces: [ Ethernet49 ]
          uplink_switches: [ spine-2 ]
          uplink_switch_interfaces: [ Ethernet3 ]
    - group: IDF2
      filter:
        tags: [ "IDF2_DATA", "IDF2_VOICE" ]
      mlag: false
      nodes:
        - name: leaf-2a
          id: 3
          mgmt_ip: 192.168.0.16/24
          uplink_interfaces: [ Ethernet1/1, Ethernet2/1 ]
          uplink_switch_interfaces: [ Ethernet4, Ethernet4 ]

We can see that IDF2 is missing the campus_access_pod key definition. Adding campus_access_pod: IDF2 to the IDF2 group gives us the following definition:

l2leaf:
  node_groups:
    - group: IDF1
      filter:
        tags: [ "IDF1_DATA", "IDF1_VOICE" ]
      campus_access_pod: IDF1
      nodes:
        - name: leaf-1a
          id: 1
          mgmt_ip: 192.168.0.14/24
          uplink_interfaces: [ Ethernet49 ]
          uplink_switches: [ spine-1 ]
          uplink_switch_interfaces: [ Ethernet3 ]
        - name: leaf-1b
          id: 2
          mgmt_ip: 192.168.0.15/24
          uplink_interfaces: [ Ethernet49 ]
          uplink_switches: [ spine-2 ]
          uplink_switch_interfaces: [ Ethernet3 ]
    - group: IDF2
      filter:
        tags: [ "IDF2_DATA", "IDF2_VOICE" ]
      campus_access_pod: IDF2
      mlag: false
      nodes:
        - name: leaf-2a
          id: 3
          mgmt_ip: 192.168.0.16/24
          uplink_interfaces: [ Ethernet1/1, Ethernet2/1 ]
          uplink_switch_interfaces: [ Ethernet4, Ethernet4 ]

We can verify that leaf-2a is now inheriting the correct key.

ansible-inventory -i sites/campus_a/inventory.yml --host leaf-2a | jq '.l2leaf.node_groups[] | select(.group == "IDF2")'

{
  "campus_access_pod": "IDF2",
  "filter": {
    "tags": [
      "IDF2_DATA",
      "IDF2_VOICE"
    ]
  },
  "group": "IDF2",
  "mlag": false,
  "nodes": [
    {
      "id": 3,
      "mgmt_ip": "192.168.0.16/24",
      "name": "leaf-2a",
      "uplink_interfaces": [
        "Ethernet1/1",
        "Ethernet2/1"
      ],
      "uplink_switch_interfaces": [
        "Ethernet4",
        "Ethernet4"
      ]
    }
  ]
}

Now that we have modified our inventory to map to our new YAML data model for leaf-2a, we can build the configs to generate the new tags.

Then, deploy our changes through CloudVision. In this scenario there are no configuration changes, so no change control should be created. However, because we have adjusted the tags for leaf-2a, CloudVision will update those tags in Studios on the back end for us.

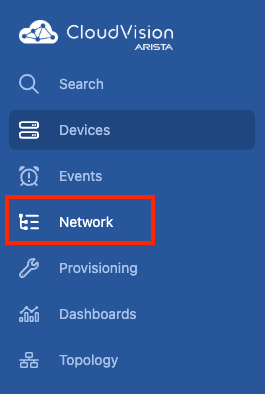

Let's check our work in the Campus Network Hierarchy IDF view. In the left CloudVision menu, click on Network.

We should now see IDF2 with our leaf-2a node in it.

Additionally, if we browse back over to the Campus Health Overview dashboard, and click Access Interface Configuration, we should be able to select IDF2 from the dropdown to configure.

Let's continue with that and configure the endpoint port for host-3, which connects on leaf-2a, Ethernet1, in VLAN 120.

After reviewing and confirming our changes, our host-3 should now have connectivity to our campus fabric.

Management Functions

Remember, this workshop showcases how the infrastructure management of our fabric can be performed via AVD without interfering with day-to-day tasks such as assigning endpoint ports via quick actions. In this next section, we will go back and add some configuration to our fabric via AVD.

Login Banner

When we initially deployed our multi-site topology, we should have included a login banner on all our switches. Let's take a look at the AVD documentation site to see what the data model is for this configuration.

The banner on all of our switches will be the same. After reviewing the AVD documentation, we know we can accomplish this by defining the banners input variable in our global_vars/global_campus_vars.yml file. We will also give banners the prefix custom_structured_configuration_. The prefix denotes the use of an eos_cli_config_gen model rather than one of the abstracted eos_designs data models.

Add the code block below to global_vars/global_campus_vars.yml.

# Login Banner
custom_structured_configuration_banners:
  motd: |
    You shall not pass. Unless you are authorized. Then you shall pass.
    EOF

Yes, that "EOF" is important!

Ensure the entire code snippet above is copied, including the EOF. It must be present for the configuration to be considered valid.

Next, let's build the configurations and documentation associated with this change.

Please take a minute to review the results of our five lines of YAML and the configuration changes on the devices.

Next, we have some more work to do before deploying our changes.

Syslog Server

Our next Day 2 change is adding a syslog server configuration to all of our switches. Once again, we'll take a look at the logging_settings within the management settings data models.

Like our banner operation, the syslog server configuration will be consistent on all our switches. Because of this, we can also put this into our global_vars/global_campus_vars.yml file.

Add the code block below to global_vars/global_campus_vars.yml.

Finally, let's build our configurations.

Take a minute, using the source control feature in VS Code, to review what has changed as a result of our work.

At this point, we have our Banner and Syslog configurations in place. The configurations look good, and we're ready to deploy our changes.

CV Deploy

After running the deploy command with AVD, remember to hop back into CloudVision and approve and execute the associated change control.

Once completed, we should see our banner when logging into any switch. The output of the show logging command should also have our newly defined syslog servers.

Campus Wide Network Service

For this last piece of Day 2 Operations, we have a new endpoint that we need to configure our fabric for. This endpoint, host-6, is part of a new campus-wide security system. As such, the VLAN it lives on needs to be configured and pushed out to all leafs in our fabric, not just those in IDF3. This part will showcase how the tags we use in network services control which VLANs are pruned from which leafs.

First, we will want to add the necessary data model to the CAMPUS_A_NETWORK_SERVICES.yml file. You can copy the data model below and paste it into the file at line 73, just after the last entry.

- id: 1000
  name: 'SECURITY'
  tags: [ "IDF1_DATA", "IDF2_DATA", "IDF3_DATA" ]
  enabled: true
  ip_virtual_router_addresses:
    - 10.100.100.1
  nodes:
    - node: spine-1
      ip_address: 10.100.100.2/24
    - node: spine-2
      ip_address: 10.100.100.3/24

Notice how we are calling all of the IDFx_DATA tags. This allows us to maintain the separation of the IDF specific VLANs, while allowing this new VLAN to be configured and deployed everywhere.
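The effect of these tags can be previewed from the inventory data itself. The sketch below assumes that a VLAN is deployed to a node group when at least one of the service's tags appears in that group's filter.tags list, which matches how the tags are used in this workshop. `groups_for_tags` is a hypothetical helper name, it only inspects l2leaf node groups, and the real selection is performed by AVD when the configs are built.

```shell
# Hypothetical helper (not part of AVD): reads `ansible-inventory --host` JSON on
# stdin and prints the l2leaf node groups whose filter.tags share at least one
# tag with the JSON array passed as $1.
groups_for_tags() {
  jq -r --argjson svc "$1" '
    .l2leaf.node_groups[]
    | select(any(.filter.tags[]?; . as $tag | $svc | index($tag) != null))
    | .group'
}

# Example invocation with the tags of the SECURITY VLAN:
#   ansible-inventory -i sites/campus_a/inventory.yml --host leaf-2a \
#     | groups_for_tags '["IDF1_DATA", "IDF2_DATA", "IDF3_DATA"]'
```

Passing a single IDF-specific tag instead, such as '["IDF2_VOICE"]', returns only the group whose filter lists that tag, which is the separation between IDFs that the tags are meant to preserve.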

After adding this data model, and making sure all the indentation is correct and matches, build the configs.

Take a minute to review the results of our additional YAML and the configuration changes on the devices for the new VLAN service.

After reviewing, deploy the changes to the fabric via CloudVision.

After deploying the changes, we can log in to spine-1 and verify that the VLAN is present and trunked with the following commands:

Finally, let's check that it was propagated all the way down to our IDF3 member leafs. From member-leaf-3e, check the VLAN database with:

Endpoint Configuration

Now that we have deployed our new network service via AVD, we can go back to the Access Interface Configuration quick action and configure the endpoint port that our new host, host-6, connects to.

Head back over to Dashboards, and the Campus Health Overview dashboard.

In the Quick Actions section, click Access Interface Configuration.

Next, let's select our Campus, Cleveland, our Campus Pod, Campus A, and IDF3.

Select port Ethernet1 on member-leaf-3e, and configure it for our new VLAN, 1000.

Finally, select Review to review our configuration changes, then Confirm to deploy them.

Once the changes are complete, we can verify our new host has connectivity by accessing the CLI of host-6, and attempting to ping its gateway.

PING 10.100.100.1 (10.100.100.1) 72(100) bytes of data.
80 bytes from 10.100.100.1: icmp_seq=1 ttl=63 time=30.2 ms
80 bytes from 10.100.100.1: icmp_seq=2 ttl=63 time=29.5 ms
80 bytes from 10.100.100.1: icmp_seq=3 ttl=63 time=28.8 ms
80 bytes from 10.100.100.1: icmp_seq=4 ttl=63 time=24.8 ms
80 bytes from 10.100.100.1: icmp_seq=5 ttl=63 time=26.2 ms

Great Success!

Congratulations. You have now successfully completed initial fabric builds and day 2 operational changes, utilizing both AVD and CloudVision, without interacting with any switch CLI!